Dealing With Missing Values, Part 2.

Multivariate Imputation by Chained Equations, Indicator Variable Techniques, and Domain-Specific Rules

In the previous post, I introduced the topic of dealing with missing values in Data Science and Machine Learning. The topic turned out to be much larger than I had expected when I decided to rewrite a blog post from years ago, so I split it into several parts. The more I think about it, though, the more convinced I am that only a short book on the topic would do it full justice.

Multivariate Imputation by Chained Equations (MICE)

Multivariate Imputation by Chained Equations (MICE) is a sophisticated approach for handling missing data. It is especially effective when missingness follows a complex pattern across several features but the data can still be assumed Missing At Random (MAR), meaning that the probability of a value being missing depends only on values that were observed. Unlike simpler methods that handle each feature independently, MICE iteratively models each feature based on the others, capturing the relationships within your dataset.

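The MICE process works by first filling missing values with initial estimates, often simple ones like the mean or median. It then cycles through the features, treating each one in turn as the target of a regression model fitted on the others, and replaces that feature's missing entries with the model's predictions. The cycle repeats until the estimates stabilize or a fixed number of iterations is reached. This lets MICE preserve multivariate relationships far better than univariate methods. Uncertainty estimates for the imputed values come from repeating the procedure with random draws to produce several imputed datasets and looking at how much the imputations vary between them.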

However, MICE requires careful tuning. It involves deciding on the number of iterations to run and handling the computational complexity that arises from modeling each feature iteratively.

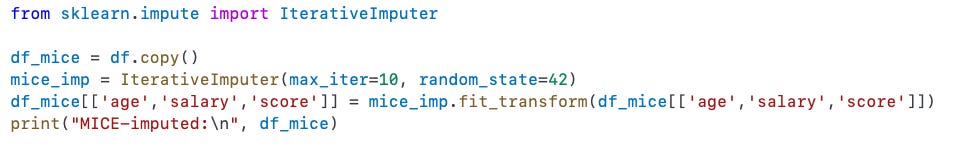

Here is how you can implement MICE using Python's scikit-learn:
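The following is a minimal sketch rather than a definitive implementation. It assumes scikit-learn's `IterativeImputer`, which is modeled on MICE and is still flagged as experimental (hence the extra `enable_iterative_imputer` import). The toy DataFrame, the column names, `max_iter=10`, and the choice of five repeated draws are all illustrative assumptions, not recommendations.

```python
import numpy as np
import pandas as pd
from sklearn.experimental import enable_iterative_imputer  # noqa: F401 (unlocks the experimental class)
from sklearn.impute import IterativeImputer

# Hypothetical toy data with gaps in every column
df = pd.DataFrame({
    "age": [25, 32, np.nan, 47, 51, np.nan, 38],
    "income": [40_000, np.nan, 58_000, 82_000, np.nan, 61_000, 70_000],
    "years_experience": [2, 8, 10, np.nan, 25, 12, 14],
})

# Single imputation: start from column means, then refine each column
# with a regression on the other columns (BayesianRidge by default).
imputer = IterativeImputer(max_iter=10, initial_strategy="mean", random_state=0)
imputed = pd.DataFrame(imputer.fit_transform(df), columns=df.columns, index=df.index)

# Multiple imputation: sample_posterior=True draws each imputed value from
# the model's predictive distribution, so different seeds give different
# completed datasets. The spread across them is a rough uncertainty signal.
draws = np.stack([
    IterativeImputer(max_iter=10, sample_posterior=True, random_state=seed).fit_transform(df)
    for seed in range(5)
])
spread = pd.DataFrame(draws.std(axis=0), columns=df.columns, index=df.index)

print(imputed.round(1))
print(spread.round(1))  # zero for observed cells, positive for imputed ones
```

On a dataset this small the imputed numbers are not meaningful. The point is the shape of the workflow: one call for a single completed dataset, and a short loop when you also want a sense of how uncertain each imputed cell is.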

Indicator Variable Techniques

Sometimes, the pattern of missing data itself can carry valuable information. In such scenarios, indicator variable techniques explicitly record the presence or absence of data as separate binary indicators. This method ensures that the imputation process and downstream models can learn directly from patterns of missingness.

Indicator variables help algorithms distinguish between originally observed and imputed values, which can improve the performance of predictive models when missingness is related to the target. However, this technique adds one column per feature with missing values, increasing the dimensionality of the dataset. That can lead to overfitting, especially when missing values are rare and an indicator column is nonzero for only a handful of rows.

A common practice is to combine indicator variables with a straightforward imputation strategy like mean or mode imputation, as illustrated below:
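One way to sketch this combination, assuming scikit-learn's `SimpleImputer` with `add_indicator=True`, is shown here. The DataFrame and the `_was_missing` suffix are hypothetical names chosen for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Hypothetical toy data: "tenure" is complete, the other two have gaps
df = pd.DataFrame({
    "age": [25, np.nan, 47, 51],
    "income": [40_000, 58_000, np.nan, 61_000],
    "tenure": [1, 3, 5, 7],
})

# Mean imputation plus one binary indicator per column that had missing values
imputer = SimpleImputer(strategy="mean", add_indicator=True)
values = imputer.fit_transform(df)

# indicator_.features_ holds the positions of the columns that received an indicator
indicator_cols = [f"{df.columns[i]}_was_missing" for i in imputer.indicator_.features_]
result = pd.DataFrame(values, columns=list(df.columns) + indicator_cols, index=df.index)

print(result)
```

By default, indicators are only created for columns that contained missing values during fitting, so `tenure` gets no extra column here. That keeps the added dimensionality proportional to the number of incomplete features.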

Domain-Specific Rules

Domain-specific imputation leverages expert knowledge or external contextual data to fill missing values. This method is highly effective when you have clear business logic or contextual insights guiding the imputation process.

For example, if you know from domain knowledge that all employees in the engineering department earn at least $70,000 annually, then this information should directly inform how you handle missing values in the "Salary" column. Using domain-specific rules can improve the validity of your imputation significantly over general statistical approaches.

Here's a practical example of applying domain-specific imputation:
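The sketch below uses pandas and encodes the engineering salary floor from the example above. The data, the `ENGINEERING_MIN_SALARY` constant, and the decision to fall back on the overall median before applying the rule are illustrative assumptions. In a real project the fallback estimate and the rule itself would come from your domain experts.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data
df = pd.DataFrame({
    "Department": ["Engineering", "Sales", "Sales", "Engineering", "Sales", "Sales"],
    "Salary": [95_000, 52_000, 48_000, np.nan, np.nan, 50_000],
})

ENGINEERING_MIN_SALARY = 70_000  # domain rule from the example in the text

# Remember which rows were missing so the rule only touches imputed values
was_missing = df["Salary"].isna()

# Step 1: generic statistical fallback (overall median of observed salaries)
fallback = df["Salary"].median()
df["Salary"] = df["Salary"].fillna(fallback)

# Step 2: domain rule. An imputed engineering salary may not fall below the floor.
needs_floor = was_missing & (df["Department"] == "Engineering")
df.loc[needs_floor, "Salary"] = df.loc[needs_floor, "Salary"].clip(lower=ENGINEERING_MIN_SALARY)

print(df)
```

Here the overall median is 51,000, which would be an implausible salary for an engineer under the stated rule, so the missing engineering value is raised to 70,000 while the missing sales value keeps the median.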

This concludes the second part of this series. In the last part, we’ll tackle probabilistic methods, interpolation, and tree-based methods.