Dealing With Missing Values, Part 1.

A semi-comprehensive look at all the ways we deal with missing values in Data Science and Machine Learning

No real-world data collection process is perfect, and we are often left with all sorts of noise in our dataset: incorrectly recorded values, non-recorded values, corrupted data, and so on. Even if we are able to spot all those irregular points, oftentimes the best we can do is treat them as missing values. Missing values are a fact of life if you work in data science, machine learning, or any other field that relies on real-world data. Most of us hardly give those data points much thought, and when we do, we rely on ready-made tools, algorithms, or rules of thumb to deal with them. That is risky, because missing data can significantly affect the results of analyses and models, potentially leading to biased or misleading outcomes. To do missing values proper justice you sometimes need to dig deeper and make a judicious choice about how to handle them. And what you end up doing with them, like in many other circumstances in data science, boils down to the trusted old phrase “it depends”.

Many years ago I wrote a corporate blog post on this topic. The experience taught me many valuable lessons about blogging: that post had to go through way too many chains of command before it saw the light of day, and eventually, like most other things about that startup, it disappeared. I’ve decided to revisit the topic and try to do it even more justice this time around. As I embarked on that journey, I soon realized that a single post would not do. So here is the first part of what I expect to be a three-part series.

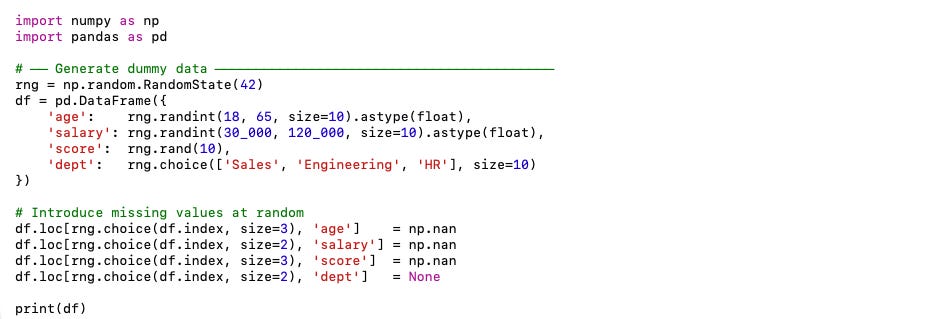

Let’s start by creating a dummy dataframe into which we can randomly insert various missing values.
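A minimal sketch of such a dataframe, assuming NumPy and pandas are installed. The column names, the 200-row size, and the 10% missing rate are all made up for illustration.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)  # fixed seed so the example is reproducible
n_rows = 200

# A complete dataset with three numeric columns and one categorical column.
df = pd.DataFrame({
    "age": rng.normal(40, 12, n_rows).round(1),
    "income": rng.lognormal(10.5, 0.6, n_rows).round(2),
    "score": rng.uniform(0, 100, n_rows).round(1),
    "city": rng.choice(["Austin", "Boston", "Chicago"], size=n_rows),
})

# Blank out roughly 10% of each column. The mask ignores the values themselves,
# so the missingness here is completely at random.
df_missing = df.copy()
for col in df_missing.columns:
    mask = rng.random(n_rows) < 0.10
    df_missing.loc[mask, col] = np.nan

print(df_missing.isna().sum())  # missing count per column
print(df_missing.head())
```

Keeping the complete `df` around next to `df_missing` is handy later on, since it lets us compare imputed values against the ones we removed.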

Deletion-Based Methods

Deletion-based methods handle missing data by completely removing rows or columns that contain missing values. This approach is straightforward and easy to apply, requiring no additional parameters or complex adjustments. However, it should only be used under specific circumstances.

Deletion-based methods are most suitable when the missing data occur completely at random (MCAR). In other words, the absence of certain data points must be entirely unrelated to any observable or hidden variables. Additionally, these methods are practical only when dealing with large datasets, where losing some data points or variables will not significantly affect the overall dataset or analysis results.

The primary advantage of deletion-based methods is their simplicity. However, a notable drawback is the potential for significant data loss, particularly in datasets with many missing values. If the assumption of MCAR does not hold, results can become biased due to selective data removal.
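To make the bias concrete, here is a small simulation, offered as a sketch under made-up assumptions: a synthetic, lognormally distributed "income" variable, a 30% MCAR missing rate, and a second mechanism in which above-median values go missing far more often than below-median ones.

```python
import numpy as np

rng = np.random.default_rng(0)
income = rng.lognormal(mean=10.5, sigma=0.5, size=100_000)

# MCAR: every value has the same 30% chance of being missing.
mcar_missing = rng.random(income.size) < 0.30

# Not MCAR: above-median values go missing with probability 0.5,
# below-median values with probability 0.1.
p_missing = np.where(income > np.median(income), 0.50, 0.10)
not_mcar_missing = rng.random(income.size) < p_missing

# Deleting the missing entries and averaging what is left.
true_mean = income.mean()
mcar_mean = income[~mcar_missing].mean()
not_mcar_mean = income[~not_mcar_missing].mean()

print(f"true mean:               {true_mean:,.0f}")
print(f"after deletion, MCAR:    {mcar_mean:,.0f}")
print(f"after deletion, no MCAR: {not_mcar_mean:,.0f}")
```

Under MCAR the mean of the remaining values stays close to the true mean. When high values are more likely to be missing, deletion pulls the estimate down noticeably.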

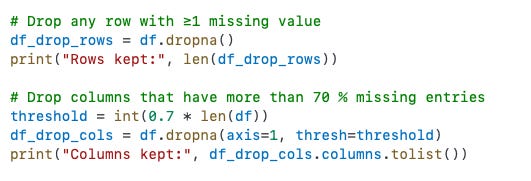

Here is an example using Python and the Pandas library:
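What follows is a minimal sketch of the common deletion variants using `DataFrame.dropna`. The tiny dataframe and its column names are hypothetical and exist only to make the effect of each call easy to see.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "age": [25, np.nan, 47, 51, 38],
    "income": [52000, 61000, np.nan, 88000, 45000],
    "notes": [np.nan, np.nan, np.nan, "vip", np.nan],
})

# Listwise deletion: keep only the rows with no missing values at all.
rows_dropped = df.dropna()

# Column deletion: drop every column that contains at least one missing value.
cols_dropped = df.dropna(axis=1)

# Threshold-based deletion: keep only columns with at least 3 non-missing values.
sparse_cols_dropped = df.dropna(axis=1, thresh=3)

# Deletion restricted to the columns that matter for the analysis.
subset_dropped = df.dropna(subset=["age", "income"])

print(rows_dropped.shape, cols_dropped.shape)
print(sparse_cols_dropped.columns.tolist(), subset_dropped.shape)
```

In this toy example plain listwise deletion keeps a single row, because the mostly empty `notes` column disqualifies almost everything. Dropping the sparse column first, or restricting the check to a subset of columns, preserves far more data.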

Simple Imputation (Mean/Median/Mode)

Simple imputation involves replacing missing values with basic statistical measures such as the mean, median, or mode of the existing data. This method is a reasonable choice when the share of missing values is low to moderate, providing a quick and straightforward solution.

Mean imputation is typically applied to numeric data that is approximately normally distributed, while median imputation is recommended for skewed numeric data due to its robustness against outliers. Mode imputation is most commonly used for categorical variables, replacing missing entries with the most frequently occurring category.

The advantages of simple imputation include its speed and ease of implementation. Nevertheless, this approach can significantly underestimate data variance, potentially introducing biases into subsequent analyses or models.
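The variance shrinkage is easy to see in a quick simulation. This is a sketch on synthetic data: the normal distribution, its parameters, and the 30% missing rate are arbitrary choices for illustration.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(1)
values = pd.Series(rng.normal(loc=50, scale=10, size=10_000))

# Remove about 30% of the values completely at random.
observed = values.copy()
observed[rng.random(values.size) < 0.30] = np.nan

# Fill every gap with the mean of the observed values.
mean_imputed = observed.fillna(observed.mean())

print(f"std of observed values:  {observed.std():.2f}")
print(f"std after mean imputing: {mean_imputed.std():.2f}")
```

Every imputed point sits exactly at the mean, so it adds nothing to the spread while still counting as an observation. The mean is preserved, but the standard deviation drops.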

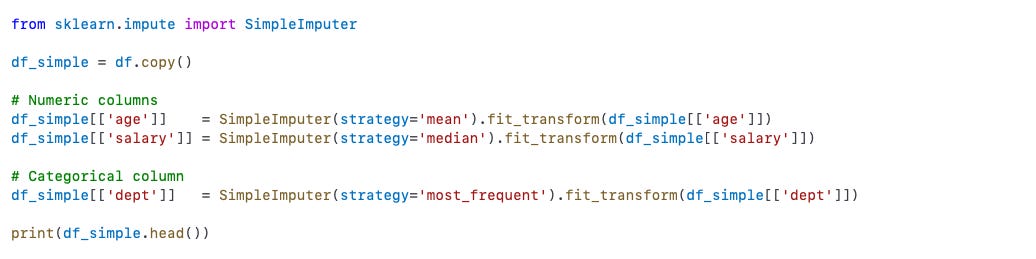

The following Python code demonstrates simple imputation:
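Here is a minimal pandas-only sketch of the three variants. The dataframe, its column names, and the choice of which statistic goes with which column are assumptions made for illustration.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "height_cm": [170.0, 165.0, np.nan, 180.0, 175.0],            # roughly symmetric
    "income": [40_000.0, 42_000.0, 45_000.0, np.nan, 400_000.0],  # skewed by an outlier
    "city": ["Austin", "Boston", "Austin", np.nan, "Austin"],     # categorical
})

imputed = df.copy()

# Mean for the roughly symmetric numeric column.
imputed["height_cm"] = df["height_cm"].fillna(df["height_cm"].mean())

# Median for the skewed numeric column, so the outlier does not drag the fill value up.
imputed["income"] = df["income"].fillna(df["income"].median())

# Mode (most frequent category) for the categorical column.
imputed["city"] = df["city"].fillna(df["city"].mode().iloc[0])

print(imputed)
```

Note that the mean of the observed incomes here would be 131,750, while the median is 43,500. That gap is the reason the median is usually the safer fill value for skewed data.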

KNN & Regression-Based Imputation

More sophisticated methods for handling missing data include K-Nearest Neighbors (KNN) and regression-based imputation. These methods leverage relationships and patterns in the data to estimate missing values more accurately than simple statistical methods.

KNN imputation estimates a missing value from the values of the closest neighboring data points. It is particularly useful when preserving the local structure of the data is important and linear assumptions may not hold. Regression-based imputation, on the other hand, predicts missing values from the other features using a fitted regression model, which in its basic form assumes the relationships among features are linear.

The advantage of these advanced methods is their potential for increased accuracy compared to simple imputation. However, they tend to be more computationally intensive. These methods can also over-smooth the data, since imputed values cluster around the model's predictions, and they can leak target information if the target, or features derived from it, are inadvertently used as predictors in the imputation model.

The following examples illustrate these techniques in Python:
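Below is a sketch of both approaches, assuming scikit-learn is available. It uses `KNNImputer` for the KNN variant and a plain `LinearRegression` fitted on the complete rows for the regression variant. The synthetic columns `a`, `b`, and `c`, the linear relationship between them, and the 20% missing rate are made up for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.impute import KNNImputer
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(7)
n = 300

# Synthetic data in which column "c" depends linearly on "a" and "b".
a = rng.normal(0, 1, n)
b = rng.normal(0, 1, n)
c = 2.0 * a - b + rng.normal(0, 0.3, n)
df = pd.DataFrame({"a": a, "b": b, "c": c})

# Remove about 20% of column "c" completely at random.
df_missing = df.copy()
is_missing = rng.random(n) < 0.20
df_missing.loc[is_missing, "c"] = np.nan

# KNN imputation: average "c" over the 5 nearest rows in the (a, b) space.
knn = KNNImputer(n_neighbors=5)
knn_imputed = pd.DataFrame(
    knn.fit_transform(df_missing), columns=df.columns, index=df.index
)

# Regression imputation: fit on rows where "c" is observed, predict the rest.
observed = df_missing["c"].notna()
model = LinearRegression().fit(
    df_missing.loc[observed, ["a", "b"]], df_missing.loc[observed, "c"]
)
reg_imputed = df_missing.copy()
reg_imputed.loc[~observed, "c"] = model.predict(df_missing.loc[~observed, ["a", "b"]])

# Compare the imputed values against the true values we removed.
truth = df.loc[is_missing, "c"]
knn_rmse = np.sqrt(((knn_imputed.loc[is_missing, "c"] - truth) ** 2).mean())
reg_rmse = np.sqrt(((reg_imputed.loc[is_missing, "c"] - truth) ** 2).mean())
print(f"KNN RMSE: {knn_rmse:.3f}, regression RMSE: {reg_rmse:.3f}")
```

Because this toy data is linear by construction, the regression variant has an easy job. On real data the comparison can go either way. Two practical caveats: KNN distances are sensitive to feature scale, so features on very different scales are usually standardized first, and in a modeling pipeline the imputer is typically fitted on the training split only and then applied to the test split.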

This concludes the first part of this series. In the next two parts we’ll tackle some more advanced techniques.